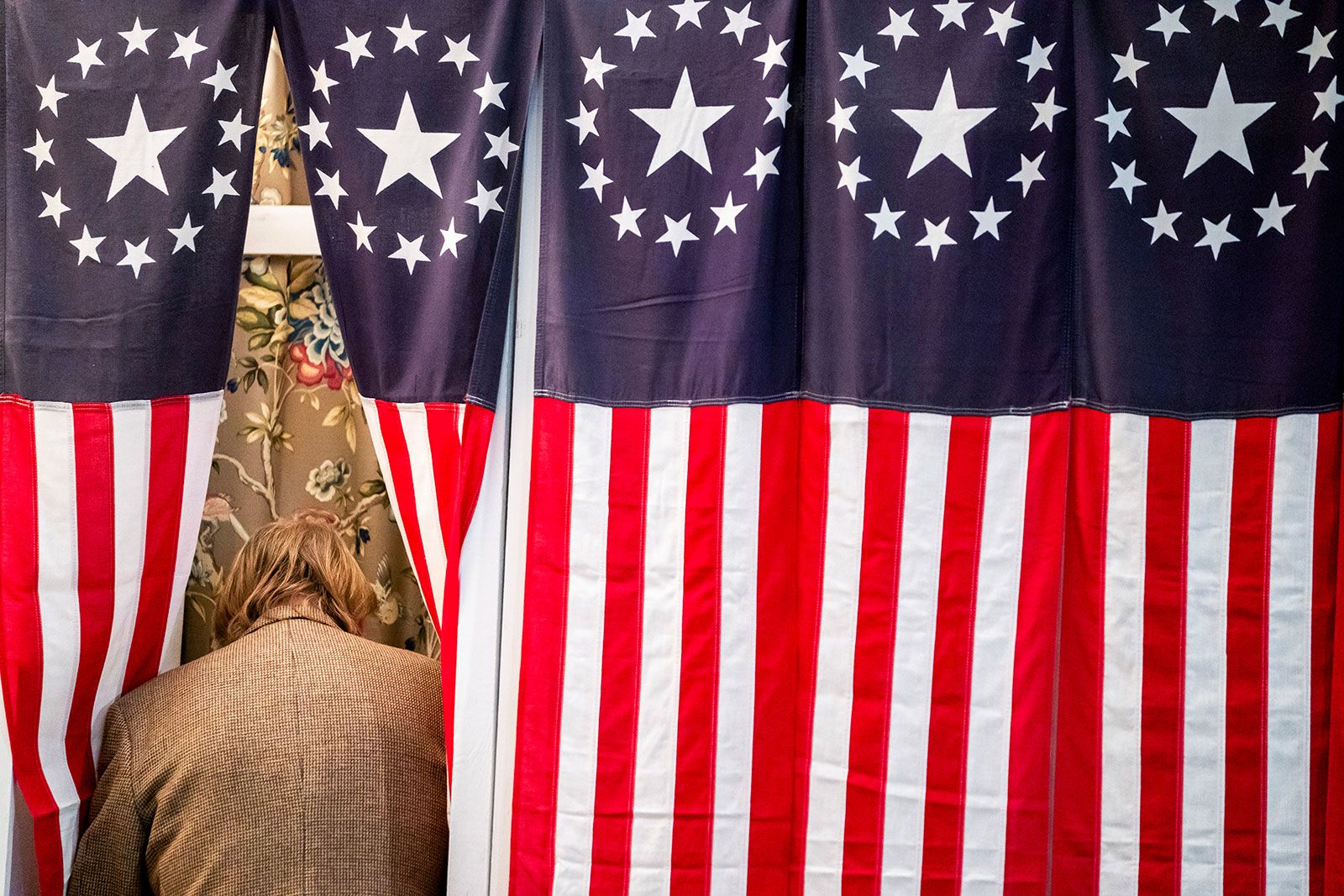

In a disconcerting development from New Hampshire, voters preparing to cast their primary ballots were targeted by robocalls featuring a counterfeit version of President Joe Biden’s voice. The intent was to dissuade Democrats from participating in the primary. The disturbing aspect is not the message itself but the fact that the audio, though apparently fake, bears an uncanny resemblance to the President’s voice, even incorporating his signature “malarkey” phrase. The question remains: who is behind these robocalls?

Warnings about the potential use of deepfakes to manipulate US elections have been circulating for years. It appears that the era of election deepfakes has now arrived. I spoke with Donie O’Sullivan, CNN’s correspondent covering politics and technology, who has long been tracking this story. Here are some excerpts from our conversation.

The Rise of AI-Generated Disinformation

O’Sullivan expects this incident to be the first of many similar stories we will encounter this year. He believes we are on the brink of a surge in AI-generated disinformation, with audio deepfakes a particular concern.

Understanding Deepfakes

A “deepfake” typically refers to a fake video, created using artificial intelligence, that appears incredibly realistic. In recent years, AI has made the creation of fake images, videos, and audio significantly easier. This technology differs fundamentally from traditional methods such as audio splicing or Photoshop: the computer generates the images and audio itself, which produces a more realistic result.

The Creators of Deepfakes

According to O’Sullivan, almost anyone could potentially create a convincing deepfake. While this technology was primarily accessible to nation-states a few years ago, recent advancements have made it widely available. As an example, O’Sullivan describes using readily available software to create a fake audio version of his own voice, which fooled his father into having a full conversation with the AI-generated voice.

The Danger of Deepfakes

While the robocall in question is unlikely to influence an election where Biden isn’t even on the ballot, the ultimate threat lies in the potential misuse of this technology. Deepfakes could be used to create fake recordings that make it sound as though politicians said something incriminating that they never actually said. Although there are checks and balances in place, misinformation spreads so quickly that it can still sow doubt in people’s minds.

Advice for the Public

O’Sullivan advises the public to approach everything they see and hear with skepticism, especially online. He emphasizes the importance of being diligent and getting information from reliable sources.

Deepfakes and Deniability

The existence of deepfake technology also gives politicians and others a way to deny things that really happened by claiming that genuine recordings are deepfakes. This adds another layer of complexity to the issue.

Expect Deepfakes Everywhere

O’Sullivan warns that because deepfakes are easy to create, they could potentially be used at all levels of politics, not just at the presidential level. This makes them harder to detect and counteract.

Looking Forward

Despite the grim outlook, O’Sullivan believes that as people become more aware of deepfakes, they will also become more skeptical and discerning. He hopes that society is getting better at dealing with this type of disinformation.